I first learned about computer programming in 8th grade. There was a math teacher at our high school with a Ph.D. Why Dr. Jackson taught at a high school, I have no idea. He had worked out an arrangement to teach some math classes about programming in Fortran. Fortran was one of the first high-level programming languages. The name stood for FORmula TRANslation. It was really only well suited to mathematical work. The details are fuzzy now, and I am not sure if it had subroutines. It did have an absurd variable naming convention. Variables starting with the letters I through N defaulted to INTEGER. Other variable names defaulted to REAL (floating point numbers). You can see the manual for the FORTRAN IV variant for the IBM 1401 mainframe here.
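For anyone who never met that naming rule, here is a minimal sketch of it in Python rather than Fortran. The helper name `implicit_type` is made up for illustration, and the sketch ignores explicit type declarations, which would override the default.

```python
def implicit_type(name: str) -> str:
    """Return the default type FORTRAN assigns to an undeclared variable.

    Hypothetical helper: only the first letter matters, and
    I, J, K, L, M, N mean INTEGER while everything else means REAL.
    """
    first = name.strip().upper()[0]
    return "INTEGER" if "I" <= first <= "N" else "REAL"


# MASS starts with M, so it silently becomes an INTEGER: a classic trap.
for name in ("I", "KOUNT", "MASS", "X", "ALPHA", "RATE"):
    print(f"{name:6s} {implicit_type(name)}")
```

The `MASS` case shows why the convention felt absurd: a perfectly sensible name for a floating point quantity ends up truncated to a whole number unless it is declared REAL.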

Dr. Jackson mostly had us filling in coding forms used for keypunching into Hollerith cards. I have no memory of really understanding what these statements were supposed to do, but learning is often at first "Monkey see, monkey do." We then went to the high school, and used an IBM 029 keypunch to punch in the statements.

Hollerith cards were 80 columns wide.

There were several rows going across: 0-9, a 12s row, and I think another one. The keypunch knocked little square holes into the card stock. A program consisted of a few cards or many boxes (2000 cards per box).

The IBM 1620 mainframe that the high school had read these cards in a card reader, a descendant of the sorting devices Herman Hollerith created to do the 1890 census tabulation before they started the 1900 census.

I have no idea what that program was supposed to do, but it was written in FORTRAN II.

By the time I again became aware of computers, I was in 10th grade. My friend Louis Watanabe was trying to distract me from a completely unhealthy infatuation with a very smart girl named Holly Thomas who was in my 9th grade German class. My grades improved from B- to B+ within a few weeks of my unhealthy interest in Holly, then consistently As in high school. Anyway, Louis had been one of Dr. Jackson's students who actually took to computers and later became rich at Microsoft.

The high school had upgraded to an IBM 1401 mainframe.

It was a monster with 4K of core memory in that CPU, and another 12K in an external washing machine sized unit. Core memory actually stored binary data in little magnetized iron cores.

Yes, those wires set and read the bits. Core memory was non-volatile.

Hard disks? Of course.

Yes, that is a removable drive. Each could hold 3MB.

My first use of the 1401 computer was to write FORTRAN IV programs to calculate magnification and field of view of various eyepieces for my 8" reflector (which I still have, 45 years later). Eventually, I learned to program in the 1401's machine language. What is that?
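The eyepiece arithmetic is simple enough to sketch. This is a Python approximation, not the original FORTRAN IV, and it assumes the usual formulas: magnification is the telescope focal length divided by the eyepiece focal length, and true field of view is roughly the eyepiece's apparent field divided by the magnification. The 1200 mm focal length, the eyepiece list, and the 50 degree apparent field are placeholder assumptions, not the numbers for the actual 8" reflector.

```python
def magnification(telescope_fl_mm: float, eyepiece_fl_mm: float) -> float:
    """Magnification = telescope focal length / eyepiece focal length."""
    return telescope_fl_mm / eyepiece_fl_mm


def true_field_deg(apparent_field_deg: float, mag: float) -> float:
    """Approximate true field of view = apparent field / magnification."""
    return apparent_field_deg / mag


TELESCOPE_FL_MM = 1200.0      # assumed focal length, for illustration only
APPARENT_FIELD_DEG = 50.0     # assumed apparent field of each eyepiece

for eyepiece_fl in (25.0, 12.0, 6.0):   # assumed eyepiece focal lengths in mm
    mag = magnification(TELESCOPE_FL_MM, eyepiece_fl)
    field = true_field_deg(APPARENT_FIELD_DEG, mag)
    print(f"{eyepiece_fl:5.1f} mm eyepiece: {mag:6.1f}x, {field:.2f} degree field")
```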

First a little unbelievable technical discussion. Core memory was organized utterly differently than today. Each byte of memory was 8 bits like today. Memory addressing was decimal. After address 100 came address 101. It was actually organized that way; not an artifact of hiding hexadecimal from us. The encoding scheme was EBCDIC: Extended Binary Code and Interchange Code:

ASCII? What's that?

There was no concept of words. If you wanted to do a 100 digit by 100 digit multiply, you set what was called a word mark at the end of the fields. An add instruction would be literally the letter A followed by the addresses of the two fields. M for multiply, S for subtract, D for divide.
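To make the word mark idea concrete, here is a rough Python sketch of variable-length decimal addition. It is a simplification under stated assumptions, not a 1401 emulator: each memory cell holds one digit plus a word mark flag, a field is addressed by its rightmost digit, the word mark sits on its leftmost digit, and signs, zone bits, and overflow are ignored. The names `Cell`, `store_field`, `add_fields`, and `read_field` are invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    digit: int = 0
    word_mark: bool = False


def store_field(memory, low_addr, digits):
    """Store a digit string ending at low_addr; flag its leftmost digit."""
    start = low_addr - len(digits) + 1
    for offset, ch in enumerate(digits):
        memory[start + offset] = Cell(int(ch), word_mark=(offset == 0))


def add_fields(memory, a_addr, b_addr):
    """Add the A field into the B field, right to left, until B's word mark."""
    carry = 0
    a_done = False
    while True:
        a_digit = 0 if a_done else memory[a_addr].digit
        total = memory[b_addr].digit + a_digit + carry
        memory[b_addr].digit = total % 10
        carry = total // 10
        if not a_done and memory[a_addr].word_mark:
            a_done = True          # A field exhausted; keep propagating carry
        if memory[b_addr].word_mark:
            break                  # B's word mark ends the operation
        a_addr -= 1
        b_addr -= 1


def read_field(memory, low_addr):
    """Read a field back as a digit string, stopping at its word mark."""
    digits = []
    addr = low_addr
    while True:
        digits.append(str(memory[addr].digit))
        if memory[addr].word_mark:
            break
        addr -= 1
    return "".join(reversed(digits))


memory = [Cell() for _ in range(400)]
store_field(memory, 120, "0000123456")   # B field, 10 digits
store_field(memory, 210, "999")          # A field, 3 digits
add_fields(memory, 210, 120)
print(read_field(memory, 120))
```

The point of the sketch is that the field length lives in memory, not in the instruction: the same add loop handles 3 digits or 100 digits depending only on where the word marks are.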

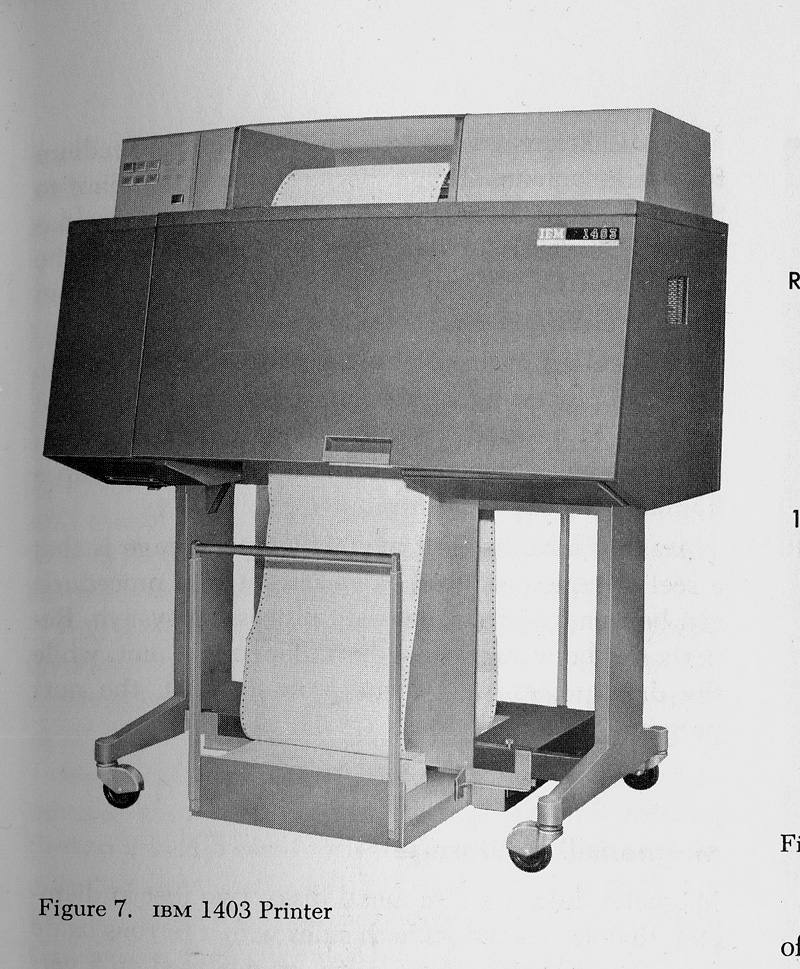

To read a card from the card reader into 000-080 the instruction was 1, to punch a card from 100-180, 2, to print from locations 201-323, 4. Oh yes, the printer: an IBM 1403 line printer:

It used fanfold paper transported through the printer by those holes in the side:

and usually "green bar" paper. These were very wide sheets. They could print at what seemed insane speeds until I saw my first laser printer. All upper case.

These were impact printers in every worst sense of the word. They were loud enough that you closed the cover when printing; they would probably be an OSHA violation today. There was a program that you could run that rang the hammers against the type to play "Jingle Bells."

My first exposure to a computer was with Maniac I at the University of New Mexico (I was in 7th or 8th grade). I played nim with it. I had no idea how it worked, but it filled a good-sized building with racks full of vacuum tube circuitry, and its memory was a cathode ray tube, where the charge written by an electron beam was stored in a spot, as a bit.

My first algebra was Boolean algebra from a programmed learning book my engineering professor father gave me. It was easy and fun, and I did that the year before junior high school algebra.

But my first programming was similar to yours, except in my freshman year at college: FORTRAN on an IBM, in this case a 7094. Then I got access to a 1620 that lived in a room at Stanford University. The base library, at the naval air base where I was an air crew member, happened to have a 1620 manual. So I would sneak up to Stanford in the middle of the night, get a janitor to let me into the 1620 room, and program it using the console switches.

A couple of years later I was working as a systems programmer on the OS internals of GECOS-III.

I volunteer to help edit the early computer stuff, because it's something I study, plus I started with FORTRAN "IV" on an IBM 1130, the successor to the 1620. Nits for now:

It did have an absurd variable naming convention. Variables starting with the letters I through N defaulted to INTEGER.

Factor in that memory, logic and everything else were fantastically expensive when FORTRAN was developed. I.e. in vacuum tube computers, before transistors were reliable enough to use (IBM jumped right on them, BTW, including eventually the first? automated production system). So ... I wouldn't be surprised if FORTRAN I didn't even have a way to declare variable types, it was that early. But anyway, i, j, k were of course typical iterators in math, m and n as well I think.

The IBM 1620 mainframe

You are very funny. The 1620 had a code name and nickname, CADET. I think I remember there was some "space cadet" etymology, but it also stands for "Can't Add, Doesn't Even Try". This was from the era when IBM was still extremely clever and dedicated to providing "data processing" as inexpensively as possible. In competition for this lowest of low-range computers, they had a brilliant idea for the 1959 Model I: put most of the hardware budget into a fairly fast core memory unit. That allowed them to skimp on a lot of very expensive logic (assume this is around the time that the transistors DEC bought on the open market had serial numbers and cost ~$765 in 2017 dollars). E.g. it didn't have a normal ALU; it used tables in that memory for addition, subtraction, and multiplication.
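A minimal sketch of the table-lookup idea, in Python. This only illustrates the principle and is not the 1620's actual table layout: the table is built once with ordinary arithmetic (standing in for a table loaded into core), and the adder itself only ever looks digits up. `ADD_TABLE` and `add_by_table` are names invented here.

```python
# ADD_TABLE[a][b] -> (sum digit, carry). Built once, standing in for a table
# that would have been loaded into core memory.
ADD_TABLE = [[((a + b) % 10, (a + b) // 10) for b in range(10)]
             for a in range(10)]


def add_by_table(x: str, y: str) -> str:
    """Add two non-negative decimal strings using only table lookups."""
    width = max(len(x), len(y))
    x, y = x.zfill(width), y.zfill(width)
    carry = 0
    out = []
    for dx, dy in zip(reversed(x), reversed(y)):
        digit, c1 = ADD_TABLE[int(dx)][int(dy)]   # look up digit + digit
        digit, c2 = ADD_TABLE[digit][carry]       # look up result + carry-in
        carry = c1 | c2                           # at most one can be set
        out.append(str(digit))
    if carry:
        out.append("1")
    return "".join(reversed(out))


print(add_by_table("1620", "1401"))
print(add_by_table("999", "1"))
```

The trade the comment describes shows up directly: no adder logic at all, at the cost of memory for the table and a lookup per digit.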

the IBM 1401 mainframe

Again, your sense of humor knows no bounds. This was the start of IBM's lowest cost line of business computers, to transition companies from punched card based data processing, and I think their first mass produced business computer (their scientific 650 was the first mass produced computer period, per the histories I'm depending on). It was followed by the System/3 in 1969 for data centers that couldn't afford mainframes. Quite a bit of clever design in this line of computers as well, and there was plenty of 1401 object code still being run when Y2K hit.

Core memory was organized utterly differently than today. Each byte of memory was 8 bits like today. Memory addressing was decimal.

That depended on the general family the computer belonged to. IBM's scientific ones used binary addressing, but they worked on 36-bit words, just enough to encode 10 decimal digits, like the electromechanical calculators used by human "computers" such as the ladies Feynman organized at Los Alamos. That's enough precision for a great deal of scientific computing, and we humans just so happen to have 10 fingers ^_^. Plus a 6-bit character set was adequate for scientific computing (businesses had demanded upper and lower case earlier than this in pure punched card systems, if I remember correctly). So this is how the 701, the 704 (the computer FORTRAN and LISP were developed on), the 709, and their transistorized follow-ons worked.

Business computers, again given the extreme premiums on memory, logic, and being done by different groups, did decimal computing including addressing, and the 1401 is from that family. I scored a fast IBM 7 track tape drive for a student run computer center I started in 1980, it was from that line, had unit addressing out to 9.

Between the really big differences in the families, and a software crisis from too many wildly or subtly different models, IBM bet the company on the System/360, which unified everything but the lowest end computers, including the 1620 and 1130 on the scientific side, and the 1401 and System/3 on the business side. That was perhaps the biggest force in making 8 bit byte computing the standard, and byte based addressing (although the convenience of the latter gave up a lot of address space when it made a difference).

I never actually wrote "original code" in FORTRAN, but I was called upon to maintain FORTRAN code, and I hated it.

I was a COBOL guy. And RPG, and three or six other languages whose names I have deliberately dis-remembered.

Once (mid-seventies) when I was working for a Bank/Computer Service Bureau in Oakland, the keypunch dollies (remember them?) mentioned during a coffee break that they had 10,000 cards to keypunch, and it was boring because most of it was redundant. I set some wires in the card punch machine to automatically punch the 'redundant' code, and also increment a card number as the header in the card.

This saved the 6-girl department about 3 days of BORING work; they were so grateful, they bought me a pint of Crown Royal whiskey and invited me out to the parking lot to drink it.

I took the whiskey, but declined the parking-lot offer; I wanted NOT to explain to my wife what I was doing with three girls and a pint of whisky in a 1966 Ford Fairlane in the company parking lot during the lunch break.

It didn't take long for me to lose my skill-set for rewiring card punch machines. But I took the Canadian Whiskey.

B-i-L wrote a version of Forth, and sold it mail order, around 1980. I recall Byte Magazine rated ForthST the best of the ~dozen available then. At some point he seemed to lose interest in it. Years later, he was a founder of the first company that got the IoT's working. (say hello to "1984" implementation ability!)

In 1969 at the SAC HQ, I think it was 8th AF, there was a drum printer. It would print all the same characters on a line at the same time. The lines of characters on the drum were curved due to its speed. At full speed the paper would be over two feet out of the printer before falling over.

Holy stone-knives-and-bearskins, Batman.

(The first computer I ever poked at was my dad's Exidy Sorcerer, with an S100 bus.

The first one I ever actually "used" properly was a C-64.

But ... 100khz cycles and BCD internals? Computers were WEIRD in the old days.)

Just a nit: EBCDIC stands for "Extended Binary-Coded Decimal Interchange Code".

My life with computers started with coding Fortran IV on a DEC PDP-11 minicomputer with 16K words of memory, a DECTape drive, and a grand total of 10MB of disk storage. I started with this system in college, before moving on to the DECSystem 10. Assembly language was also taught on the PDP-11 which no doubt led to my later preference for the Motorola 68xxx processor series over the Intel x86 line. My first programs were done on Hollerith cards punched on IBM 029 card punches. I was happy to get to the point in class where I could move to the interactive terminals (24x80 text screen at 300 baud - we used to almost fight over the one 2400 baud terminal that was available).

By that time, the removable hard disks like the one you pictured could hold 25 MB, so we were just swimming in storage :-).

My first experience with computers was paper and pencil based. Parents couldn't afford a computer, and the school I went to didn't have them yet, but I *COULD* afford books, and I bought "BASIC Computer Games" and "More BASIC Computer Games" by David Ahl, and spent hour after hour lying on the floor with the books, graph paper, a pencil, a calculator, and the dice I "borrowed" from the Yahtzee set, working out what the code did manually.

Because it was in BASIC, it was pretty easy to figure out what did what.

I haven't heard another human being say (well, write) "EBCDIC" in decades. Gotta run, but more later, you awakened some memories.

When I took the PSAT in...1976 I think...I also had to put down what I wanted to study in college. I can't remember if it was fill in the blank or choose from a list, but in any case I thought about it for maybe 10 seconds, decided "computers" seemed to be an up and coming thing, and thus began my computer career.

We of course had no computers at our little rural school; things like PSAT forms and punch cards for classes were shipped off to some other place to be scanned, processed, and returned as paper reports.

One of the high school math teachers offered to teach a non-credit, extra-curricular class on programming, and about five or six signed up, all boys as I remember. I think only a couple or three of us saw it all the way through. He gave us some books and some instruction in FORTRAN, we wrote out our programs in longhand, and then drove 20 miles to Indiana University where we had to compete with the college students for time on the key punches, then drop our little bundle of cards into the "inbox", wait for the operators to throw them in the hopper, then pick up the card deck and our green bar paper output in the "outbox" -- to find out that we had left out a comma or some such and the whole job aborted. Good times.

IU had a CDC 6600. When I came back as an actual college student, one of my comp sci professors told me (an AFROTC cadet) that it had been paid for by the USAF, who wanted it as a backup machine in case the one at Wright-Patterson AFB ever got destroyed. The 6600 had 60-bit words, which seemed strange to me at the time.

When I graduated from the College of Arts and Sciences at IU, there were only two departments whose graduates had pretty much guaranteed jobs: Computer Science and ROTC. I had both bases covered and within a few months was on active duty with the 552 Airborne Warning and Control Wing -- the E-3 AWACS radar plane.

The ground support computer was an IBM 360/370, and the plane's computer was basically a smaller 360/370 as well, in flight garb. The early model CC-1 computers in the plane even had real core memory. Our training courses in IBM Job Control Language, 360/370 Assembler, and JOVIAL were done on punch cards, while "the real work" was done on CRTs and keyboards that were not all that old! The plane's computer was loaded with 9-track tapes, at 800 bits per inch.

I found out that I had a pretty good grounding in computer language concepts of the day, it was not hard to pick up the new languages and systems, but I had never heard of JOVIAL, which turned out to be older than I was IIRC. All the air defense computers were programmed in it. It was kind of a cross between FORTRAN and COBOL and although it was a "high level" language you could do really evil things with it, like go directly in to assembler for a few lines and directly manipulate specific bits in memory and registers.

In 1986 I believe I ran across the first Apple Macintosh in a store in Oklahoma City and bought it on the spot. It was about $800, plus $450 for a one-sided 3.5 inch hard floppy drive. About a month's pay, I think, but I was astounded by the user interface.

I write all this and feel like a complete dinosaur!

My father worked for MITRE for a while. One of the machines he did a little work on, the Whirlwind, was on display at the Smithsonian when we visited when I was a kid. They had a plane of core memory on display, and my dad told me something interesting about it. Core memory is destructive-read. The process of determining the magnetic orientation of a core demagnetized it. So you would have to read the data, and then re-write it if you wanted to keep it.
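The read-then-rewrite cycle can be sketched in a few lines of Python. This is a toy model under simple assumptions, not how any particular machine's memory controller worked: sensing a core forces it to 0 and reports what it held, so a usable read has to write the value straight back. The class and method names are invented for this illustration.

```python
class CorePlane:
    """Toy model of a core plane where sensing a bit destroys it."""

    def __init__(self, size: int):
        self._cores = [0] * size

    def write(self, addr: int, bit: int) -> None:
        self._cores[addr] = 1 if bit else 0

    def sense(self, addr: int) -> int:
        """Destructive read: report the stored bit and leave the core at 0."""
        bit = self._cores[addr]
        self._cores[addr] = 0
        return bit

    def read(self, addr: int) -> int:
        """Non-destructive from the caller's view: sense, then restore."""
        bit = self.sense(addr)
        self.write(addr, bit)
        return bit


plane = CorePlane(16)
plane.write(3, 1)
print(plane.sense(3), plane.sense(3))   # second sense finds the bit gone
plane.write(3, 1)
print(plane.read(3), plane.read(3))     # read() restores it each time
```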

I remember the cards and the card punch machine (I met it in college, and discovered that the more complicated the machine, the harder the on-off switch was to find). I believe the on-off switch for that card punch was located under the keyboard about where your right knee was.

I also remember the girl who was the object of your infatuation, only I met her in IIRC Algebra 3. She has a sister who was a co-star in the Lee Majors series Fall Guy.

Replying to Mauser... The computer I used in college (GE-635) had core memory. It also was 36 bits, with 9 bits of instruction code and 9 bits of instruction modified. But, many instruction codes were not in the manual. So, I wrote a program to try every one, using an operating system facility provided to user code to catch the bad-instruction fault ("courtesy calls"). That was 2^18 tries: 262,144. When I ran it, apparently the core overheated, because the whole system crashed (taking down everybody's jobs), and it showed a parity error in memory right in the code that handled the bad-instruction fault. Oops.

@Will... I wrote a FORTH back in the late '70s and sold it to a movie studio for motion control. FORTH was an amazing language - very primitive, but remarkably powerful. It was powerful sort of in the sense of LISP, except LISP was very computer-sciency (and first coded by Steve Russell, a friend), while FORTH was very down to earth, originally designed and written by a brilliant programmer who wanted astronomers to be able to write their own telescope control code (Charles Moore). Ultimately, LISP is more elegant, but FORTH is still amazing.